2.2. pyopus.evaluator.performance — System performance evaluation

System performance evaluation module (PyOPUS subsystem name: PE)

A system description module is a fragment of the simulated system's description. Usually it corresponds to a file or to a section of a library file.

Performance measure ordering is a list of performance measure names that defines the order of performance measures.

The heads data structure provides the list of simulators with available system description modules. The analyses data structure specifies the analyses that will be performed by the listed simulators. The corners data structure specifies the corners across which the systems will be evaluated. The measures data structure describes the performance measures which are extracted from simulation results.

The heads data structure is a dictionary with head name for key. The values are also dictionaries describing a simulator and the description of the system to be simulated with the following keys:

simulator - the name of the simulator to use (see the pyopus.simulator.simulatorClass() function for details on how this name is resolved to a simulator class) or a simulator class

settings - a dictionary specifying the keyword arguments passed to the simulator object's constructor

moddefs - definition of system description modules

options - simulator options valid for all analyses performed by this simulator. This is a dictionary with option name for key.

params - system parameters valid for all analyses performed by this simulator. This is a dictionary with parameter name for key.

The moddefs member is a dictionary with system description module name for key. Its values are dictionaries describing individual system description modules using the following keys:

file - name of the file in which the system description module is described

section - name of the file section where the system description module can be found

Specifying only the file member translates into an .include simulator input directive (or its equivalent). If the section member is also specified, the result is a .lib directive (or its equivalent).
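To make the nesting concrete, here is a possible heads entry and a sketch of how a moddefs entry could map to an .include or .lib directive. The head name 'opus', the simulator name 'SpiceOpus', the file names, options, and parameters are illustrative assumptions, and module_directive() is a hypothetical helper: in PyOPUS the directives are generated by the simulator classes, and the exact syntax depends on the simulator.

```python
# Illustrative heads structure; the head name 'opus', the simulator name,
# file names, options, and parameters are placeholders, not required values.
heads = {
    'opus': {
        'simulator': 'SpiceOpus',
        'settings': {'debug': 0},
        'moddefs': {
            'def': {'file': 'opamp.inc'},                      # file only
            'tm':  {'file': 'cmos180n.lib', 'section': 'tm'},  # file and section
        },
        'options': {'method': 'trap'},
        'params': {'vdd': 1.8},
    }
}


def module_directive(moddef):
    """Hypothetical helper: map one moddefs entry to a SPICE-style directive."""
    if 'section' in moddef:
        # file + section -> .lib directive
        return ".lib '%s' %s" % (moddef['file'], moddef['section'])
    # file only -> .include directive
    return ".include '%s'" % moddef['file']


for name, moddef in heads['opus']['moddefs'].items():
    print(name, '->', module_directive(moddef))
```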

The analyses data structure is a dictionary with analysis name for key. The values are also dictionaries describing an analysis using the following dictionary keys:

head - the name of the head describing the simulator that will be used for this analysis

modules - the list of system description module names that form the system description for this analysis

options - simulator options that apply only to this analysis. This is a dictionary with option name for key.

params - system parameters that apply only to this analysis. This is a dictionary with parameter name for key.

saves - a list of strings which evaluate to save directives specifying what simulated quantities should be included in the simulator's output. See individual simulator classes in the pyopus.simulator module for the available save directive generator functions.

command - a string which evaluates to the analysis directive for the simulator. See individual simulator classes in the pyopus.simulator module for the available analysis directive generator functions. If set to None the analysis is a blank analysis and does not invoke the simulator. Dependent measures are computed from the results of blank analyses, which include only the values of defined parameters and variables.

The environment in which the strings in the saves member and the string in the command member are evaluated is simulator-dependent. See individual simulator classes in the pyopus.simulator module for details.

The environment in which the command string is evaluated has a member named param. It is a dictionary containing all system parameters defined for the analysis. The environment also contains all variables passed at evaluator construction (via the variables argument). The variables are also available during save directive evaluation. If a variable name conflicts with a save directive generator function or is named param, the variable is not available.
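The visibility rule above can be emulated in a few lines. The helper build_environment() and the generator dc_sweep() are hypothetical stand-ins written for this sketch (real generator functions come from the simulator classes, with their own names and signatures); the point is only that generator functions and param hide variables with the same name.

```python
def build_environment(params, variables, generators):
    """Hypothetical helper: namespace for evaluating command/save strings."""
    env = dict(variables)        # variables go in first, so they can be shadowed
    env.update(generators)       # generator functions hide same-named variables
    env['param'] = dict(params)  # 'param' always refers to the parameter dictionary
    return env


def dc_sweep(source, start, stop, points):
    # Stand-in generator; not the signature of any PyOPUS function.
    return 'dc %s %g %g %d' % (source, start, stop, points)


params = {'vdd': 1.8}
# 'param' and 'dc_sweep' clash with reserved names and are therefore hidden.
variables = {'npts': 50, 'param': 'hidden', 'dc_sweep': 'hidden'}
env = build_environment(params, variables, {'dc_sweep': dc_sweep})

command = "dc_sweep('vin', 0.0, param['vdd'], npts)"
print(eval(command, {}, env))
```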

The measures data structure is a dictionary with performance measure name for key. The values are also dictionaries describing individual performance measures using the following dictionary keys:

analysis - the name of the analysis that produces the results from which the performance measure's value is extracted. If this is a blank analysis the measure is a dependent measure and is computed from the values of other measures.

corners - the list of corner names across which the performance measure is evaluated. If this list is omitted the measure is evaluated across all suitable corners. The list of such corners is generated in the constructor and stored in the availableCornersForMeasure dictionary with measure name as key. Measures that are dependencies of other measures can be evaluated across a broader set of corners than specified by corners (if required).

expression - a string specifying either a Python expression that evaluates to the performance measure's value or a Python script that stores the result in a variable bearing the same name as the performance measure. Alternatively, the script can store the result in a variable named __result.

script - a string specifying a Python script that stores the performance measure's value in a variable named __result. The script is used only when no expression is specified. This key is obsolete; use expression instead.

vector - a boolean flag which specifies that a performance measure's value may be a vector. If it is False and the obtained performance measure value is not a scalar (or scalar-like), the evaluation is considered failed. Defaults to False.

components - a string that evaluates to a list of names used for the components of a vector-valued measure. It is evaluated in an environment where variables passed via the variables argument of the constructor are available. If the resulting list is too short, numeric indices are used as names for the components it does not cover.

depends - an optional list of names of measures required for evaluating this performance measure. Specified for dependent performance measures.
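The sketch below shows a possible measures structure and one way to handle the two forms accepted by expression: a plain expression, or a script that assigns to a variable named after the measure (falling back to __result). The measure and analysis names and the helper evaluate_measure() are hypothetical; vout stands in for data that, in the real evaluator, would be obtained through the results object's accessor functions together with m, np, and param.

```python
# Hypothetical measures structure: one expression form and two script forms.
measures = {
    'swing': {
        'analysis': 'dc',
        'corners': ['nominal'],
        'expression': "max(vout) - min(vout)",
    },
    'swing2': {
        'analysis': 'dc',
        'expression': "lo = min(vout)\nswing2 = max(vout) - lo",
    },
    'npts': {
        'analysis': 'dc',
        'expression': "__result = len(vout)",
    },
}


def evaluate_measure(name, source, env):
    """Hypothetical helper: evaluate an expression or run a script."""
    namespace = dict(env)
    try:
        code = compile(source, '<measure>', 'eval')
    except SyntaxError:
        code = None
    if code is not None:
        # A single expression: its value is the measure's value.
        return eval(code, namespace)
    # Otherwise run it as a script and pick up the variable named after the
    # measure, falling back to __result.
    exec(compile(source, '<measure>', 'exec'), namespace)
    if name in namespace:
        return namespace[name]
    return namespace.get('__result')


env = {'vout': [1, 5, 9]}
for name, spec in measures.items():
    print(name, evaluate_measure(name, spec['expression'], env))
```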

If the analysis member refers to a blank analysis, the performance measure is a dependent performance measure and is evaluated after all other (independent) performance measures have been evaluated. Dependent performance measures can access the values of independent performance measures through the result data structure.

expression and script are evaluated in an environment with the following members:

Variables passed at construction via the variables argument. If a variable conflicts with any of the remaining members it is not visible.

m - a reference to the pyopus.evaluator.measure module providing a set of functions for extracting common performance measures from simulated responses

np - a reference to the NumPy module

param - a dictionary with the values of system parameters that apply to the particular analysis and corner used for obtaining the simulated response from which the performance measure is being extracted

Accessor functions provided by the results object. These are not available for dependent measures. See classes derived from the pyopus.simulator.base.SimulationResults class. The accessor functions are returned by the results object's driverTable() method.

result - a dictionary of dictionaries available to dependent performance measures only. The first key is the performance measure name and the second key is the corner name. The values represent performance measure values. If a value is None the evaluation of the independent performance measure failed in that corner.

cornerName - a string holding the name of the corner in which the dependent performance measure is currently being evaluated. Not available for independent performance measures.
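A dependent measure reads independent values through result[measureName][cornerName] and uses cornerName to select the corner under evaluation. The emulation below evaluates one such expression across corners. The measure and corner names, the helper evaluate_dependent(), and the rule that any exception (for instance from a None operand) yields None are assumptions made for this sketch.

```python
# Independent results: result[measure][corner]; None marks a failed evaluation.
result = {
    'gain': {'nominal': 100.0, 'slow': 80.0, 'fast': None},
}

# Hypothetical dependent measure: relative gain deviation from the nominal corner.
expression = "(result['gain'][cornerName] - result['gain']['nominal']) / result['gain']['nominal']"


def evaluate_dependent(expression, result, corners):
    """Hypothetical helper: evaluate a dependent measure in every corner."""
    values = {}
    for cornerName in corners:
        env = {'result': result, 'cornerName': cornerName}
        try:
            values[cornerName] = eval(expression, env)
        except Exception:
            # Any error (e.g. arithmetic on None) marks the evaluation as failed.
            values[cornerName] = None
    return values


print(evaluate_dependent(expression, result, ['nominal', 'slow', 'fast']))
```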

A corner definition is a (corner name, head name) pair for which a list of modules and a set of parameters are defined. Every corner definition must be unique.

The corners data structure is a dictionary. Three types of key are allowed.

corner_name - defines a corner for all defined heads. This results in as many corner definitions as there are heads.

corner_name, head_name - defines a corner for the specified head. This results in one corner definition.

corner_name, (head_name1, head_name2, …) - defines a corner for the specified heads. This results in as many corner definitions as there are head names in the tuple.

Values are dictionaries describing individual corners using the following dictionary keys:

modules - the list of system description module names that form the system description in this corner

params - a dictionary with the system parameters that apply only to this corner

The corners data structure can be omitted by passing None to the PerformanceEvaluator class constructor. In that case a corner named ‘default’ with no modules and no parameters is defined for all heads.
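The expansion of the three key types into corner definitions, including the default used when corners is None, can be sketched as follows. The sketch assumes that the two-part keys are Python tuples such as ('slow', 'spectre') and ('fast', ('opus', 'spectre')); the helper expand_corners(), the corner names, and the head names are hypothetical.

```python
def expand_corners(corners, head_names):
    """Hypothetical helper: expand corners into {(corner, head): definition}."""
    if corners is None:
        # Default: corner 'default' with no modules and no parameters for all heads.
        corners = {'default': {'modules': [], 'params': {}}}
    definitions = {}
    for key, spec in corners.items():
        if isinstance(key, tuple):
            corner_name, heads_part = key
            # A single head name or a tuple of head names.
            heads = heads_part if isinstance(heads_part, tuple) else (heads_part,)
        else:
            # A bare corner name applies to all heads.
            corner_name, heads = key, tuple(head_names)
        for head in heads:
            if (corner_name, head) in definitions:
                raise ValueError('corner %r defined twice for head %r' % (corner_name, head))
            definitions[(corner_name, head)] = spec
    return definitions


corners = {
    'nominal': {'modules': ['tm'], 'params': {'temp': 27}},
    ('slow', 'spectre'): {'modules': ['ws'], 'params': {'temp': 100}},
    ('fast', ('opus', 'spectre')): {'modules': ['wp'], 'params': {'temp': -40}},
}
print(sorted(expand_corners(corners, ['opus', 'spectre'])))
```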

-

class

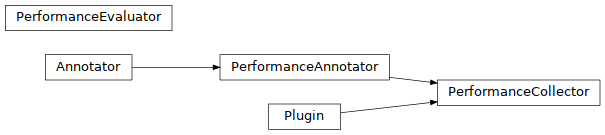

pyopus.evaluator.performance.PerformanceAnnotator(performanceEvaluator)[source]¶ A subclass of the

Annotatoriterative algorithm plugin class. This is a callable object whose job is toproduce an annotation (details of the evaluated performance) stored in the performanceEvaluator object

update the performanceEvaluator object with the given annotation

Annotation is a copy of the

resultsmember of performanceEvaluator.Annotators are used for propagating the details of the cost function from the machine where the evaluation takes place to the machine where the evaluation was requested (usually the master).
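The produce/update cycle can be pictured with a small stand-in. EvaluatorStub and ResultsAnnotator are hypothetical classes written for this sketch (they are not the PyOPUS Annotator interface); they only show that the annotation is an independent copy of the results member that replaces the results on the receiving side.

```python
import copy


class EvaluatorStub:
    """Stand-in for a PerformanceEvaluator: only the results member matters here."""
    def __init__(self):
        self.results = {}


class ResultsAnnotator:
    """Hypothetical annotator shipping a copy of evaluator.results."""
    def __init__(self, evaluator):
        self.evaluator = evaluator

    def produce(self):
        # Worker side: the annotation is a copy of the evaluator's results.
        return copy.deepcopy(self.evaluator.results)

    def update(self, annotation):
        # Master side: install the received annotation in the local evaluator.
        self.evaluator.results = annotation


worker = EvaluatorStub()
worker.results = {'gain': {'nominal': 100.0}}
master = EvaluatorStub()

annotation = ResultsAnnotator(worker).produce()
ResultsAnnotator(master).update(annotation)
print(master.results)
```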

class pyopus.evaluator.performance.PerformanceCollector(performanceEvaluator)

A subclass of the Plugin iterative algorithm plugin class. This is a callable object invoked at every iteration of the algorithm. It collects the summary of the evaluated performance measures from the results member of the performanceEvaluator object (member of the PerformanceEvaluator class).

This class is also an annotator that collects the results at remote evaluation and copies them to the host where the remote evaluation was requested.

Let niter denote the number of stored iterations. The results structures are stored in a list where the index of an entry represents the iteration number. The list can be obtained from the performance member of the PerformanceCollector object.

Some iterative algorithms do not evaluate iterations sequentially. Such algorithms denote the iteration number with the index member. If index is not present in the iterative algorithm object, the internal iteration counter of the PerformanceCollector is used.

If iterations are not performed sequentially, the performance list may contain gaps where no valid results structure is found. Such gaps are denoted by None.
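Storing results by iteration index, with None marking skipped iterations, can be sketched as below. ResultsCollector and its collect() method are hypothetical names for this illustration, not the PerformanceCollector API.

```python
class ResultsCollector:
    """Hypothetical collector: one results structure per iteration index."""
    def __init__(self):
        self.performance = []
        self.counter = 0

    def collect(self, results, index=None):
        if index is None:
            # No index from the algorithm: fall back to the internal counter.
            index = self.counter
            self.counter += 1
        if index >= len(self.performance):
            # Pad with None so that iterations not yet seen show up as gaps.
            self.performance.extend([None] * (index + 1 - len(self.performance)))
        self.performance[index] = results


collector = ResultsCollector()
collector.collect({'gain': {'nominal': 100.0}}, index=0)
collector.collect({'gain': {'nominal': 120.0}}, index=2)
print(collector.performance)
```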

class pyopus.evaluator.performance.PerformanceEvaluator(heads, analyses, measures, corners=None, params={}, variables={}, activeMeasures=None, cornerOrder=None, paramTransform=None, fullEvaluation=False, storeResults=False, resultsFolder=None, resultsPrefix='', debug=0, cleanupAfterJob=True, spawnerLevel=1)

Performance evaluator class. Objects of this class are callable. The calling convention is object(paramDictionary) where paramDictionary is a dictionary of input parameter values. The argument can also be a list of dictionaries containing parameter values. The argument can be omitted (an empty dictionary is passed).

heads, analyses, measures, and corners specify the heads, the analyses, the performance measures, and the corners. If corners are not specified, a default corner named default is created.

activeMeasures is a list of measure names that are evaluated by the evaluator. If it is not specified all measures are active. Active measures can be changed by calling the setActiveMeasures() method.

cornerOrder - specifies the order in which corners should be listed in the output. If not specified, a corner ordering is chosen automatically. The corner order is stored in the cornerOrder member.

fullEvaluation - By default only those (corner, analysis) pairs are evaluated where at least one independent measure must be computed. Result files for evaluating dependent measures are generated only for corners where at least one independent measure is evaluated. When fullEvaluation is set to True all analyses across all available corners (for the analysis' head) are performed. Result files for dependent measure evaluation are generated for all available corners across all heads.

storeResults - enables storing of simulation results in pickle files. Results are stored locally on the machine where the corresponding simulator job is run. The content of a results file is a pickled object of a class derived from SimulationResults.

resultsFolder - specifies the folder where the results should be stored. When set to None the results are stored in the system's temporary folder.

resultsPrefix - specifies the prefix for the results file names.

A pickle file name starts with resultsPrefix, followed by an id obtained from the misc.identify.locationID() function, the job name, and an additional string that makes the file name unique.

The complete list of pickle files across hosts is stored in the resFiles member, which is a dictionary. The key to this dictionary is a tuple comprising the host identifier (derived from the pyopus.parallel.vm.HostID class) and a tuple comprising corner name and analysis name. Entries in this dictionary are full paths to pickle files. If files are stored locally (on the host where the PerformanceEvaluator was invoked) the host identifier is None.

Pickle files can be collected on the host that invoked the PerformanceEvaluator by calling the collectResultFiles() method or deleted by calling the deleteResultFiles() method.

params is a dictionary of parameters that have the same value every time the object is called. They should not be passed in the paramDictionary argument. This argument can also be a list of dictionaries (the dictionaries are joined to obtain one dictionary).

variables is a dictionary holding variables that are available during every performance measure evaluation. This can also be a list of dictionaries (dictionaries are joined).
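Both params and variables accept either a dictionary or a list of dictionaries that are joined. A minimal sketch of such joining follows; the helper join_dicts() is hypothetical, and because the text does not say which entry wins when two dictionaries share a key, the sketch assumes later dictionaries override earlier ones.

```python
def join_dicts(arg):
    """Hypothetical helper: join a dict or a list of dicts into one dict."""
    if isinstance(arg, dict):
        return dict(arg)
    joined = {}
    for d in arg:
        joined.update(d)  # assumption: later dictionaries win on conflicts
    return joined


print(join_dicts([{'vdd': 1.8}, {'temp': 27}, {'vdd': 1.5}]))
```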

If debug is set to a nonzero value debug messages are generated at the standard output. Two debug levels are available (1 and 2). A higher debug value results in greater verbosity of the debug messages.

Objects of this class construct a list of simulator objects based on the heads data structure. Every simulator object performs the analyses which list the corresponding head under head in the analysis description.

Every analysis is performed across the set of corners obtained as the union of corners found in the descriptions of performance measures that list the corresponding analysis as their analysis.

The system description for an analysis in a corner is constructed from system description modules specified in the corresponding entries in the corners and analyses data structures. The definitions of the system description modules are taken from the heads data structure entry corresponding to the head specified in the description of the analysis (analyses data structure).

System parameters for an analysis in a particular corner are obtained as the union of

the input parameters dictionary specified when an object of the PerformanceEvaluator class is called

the params dictionary specified at evaluator construction

the params dictionary of the heads data structure entry corresponding to the analysis

the params dictionary of the corners data structure entry corresponding to the corner

the params dictionary of the analyses data structure entry corresponding to the analysis

If a parameter appears in multiple dictionaries, the entries in the input parameter dictionary have the lowest priority and the entries in the params dictionary of the analyses have the highest priority.

A similar priority order is applied to simulator options specified in the options dictionaries (the values from heads have the lowest priority and the values from analyses have the highest priority). The only difference is that there are no options specified separately at evaluator construction, because simulator options are always associated with a particular simulator (i.e. head).

Independent performance measures (the ones with analysis not equal to None) are evaluated before dependent performance measures (the ones with analysis set to None).

The evaluation results are stored in a dictionary of dictionaries with performance measure name as the first key and corner name as the second key. None indicates that the performance measure evaluation failed in the corresponding corner.

Objects of this type store the number of analyses performed in the analysisCount member. The counter is reset at every call to the evaluator object.
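The parameter priority order can be expressed as successive dictionary updates. The text fixes only the two endpoints (input parameters lowest, analysis params highest); this sketch assumes the intermediate dictionaries follow the order in which they are listed above. merge_params() and the parameter names are hypothetical.

```python
def merge_params(call_params, evaluator_params, head_params, corner_params, analysis_params):
    """Hypothetical helper: merge parameters from lowest to highest priority."""
    merged = {}
    for source in (call_params, evaluator_params, head_params, corner_params, analysis_params):
        merged.update(source)  # later (higher-priority) sources override earlier ones
    return merged


print(merge_params(
    {'rload': 1e3, 'vdd': 1.8},  # passed when the evaluator is called
    {'temp': 27},                # params given at construction
    {'vdd': 1.5},                # heads entry
    {'temp': 100},               # corners entry
    {'rload': 1e6},              # analyses entry
))
```

Simulator options could be merged the same way, with the options of the head first and those of the analysis last.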

A call to an object of this class returns a tuple holding the results and the analysisCount dictionary. The results dictionary is a dictionary of dictionaries where the first key represents the performance measure name and the second key represents the corner name. The dictionary holds the values of performance measures across corners. If some value is None the performance measure evaluation failed for that corner. The return value is also stored in the results member of the PerformanceEvaluator object.

Lists of component names for measures that produce vectors are stored in the componentNames member as a dictionary with measure name for key.

Simulator input and output files are deleted after a simulator job is completed and its results are evaluated. If cleanupAfterJob is False these files are not deleted. Consequently they accumulate on the hard drive. Call the finalize() method to remove them manually. Note that this not only cleans up all intermediate files, but also shuts down all simulators.

If spawnerLevel is not greater than 1, evaluations are distributed across available computing nodes (unless task distribution takes place at a higher level). Every computing node evaluates one job group. See the cooperative module for details on parallel processing. More information on job groups can be found in the simulator module.

collectResultFiles(destination, prefix='', move=True)

Result files are always stored locally on the host where the corresponding simulator job was run. This function copies or moves them to the host where the PerformanceEvaluator object was called to evaluate the circuit's performance.

The files are stored in a folder specified by destination. destination must be mounted on all workers and must be in the path specified by the PARALLEL_MIRRORED_STORAGE environment variable if parallel processing across multiple computers is used.

If destination is a tuple it is assumed to be an abstract path returned by pyopus.parallel.cooperative.cOS.toAbstractPath(). Abstract paths are valid across the whole cluster of computers as long as they refer to a shared folder listed in the PARALLEL_MIRRORED_STORAGE environment variable.

If move is set to True the original files are removed.

The file name consists of prefix, corner name, analysis name, and .pck.

Returns a dictionary with (cornerName, analysisName) for key holding the corresponding result file names.
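The relation between the resFiles keys and the dictionary returned by collectResultFiles() can be sketched as follows. The text does not state how prefix, corner name, and analysis name are joined, so the separator is a parameter here (empty by default) and is an assumption, as are the helper destination_names(), the paths, and the string 'hostA' standing in for a HostID object.

```python
import os


def destination_names(res_files, destination, prefix='', sep=''):
    """Hypothetical helper: map (corner, analysis) to the collected file path.

    res_files mirrors the resFiles member: keys are (hostID, (corner, analysis)),
    values are paths of the stored pickle files.
    """
    names = {}
    for (host_id, (corner, analysis)), path in res_files.items():
        # How the name parts are separated is an assumption.
        file_name = prefix + sep.join([corner, analysis]) + '.pck'
        names[(corner, analysis)] = os.path.join(destination, file_name)
    return names


res_files = {
    (None, ('nominal', 'op')): '/tmp/pe_local_op.pck',  # stored locally
    ('hostA', ('slow', 'ac')): '/tmp/pe_hostA_ac.pck',   # stored on a remote host
}
print(destination_names(res_files, 'collected', prefix='run1_'))
```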

deleteResultFiles()

Removes the result files from all hosts where simulation jobs were run.

formatResults(outputOrder=None, nMeasureName=10, nCornerName=6, nResult=12, nPrec=3)

Formats a string representing the results obtained with the last call to this object. Generates one line for every performance measure evaluation in a corner.

outputOrder (if given) specifies the order in which the performance measures are listed.

nMeasureName specifies the formatting width for the performance measure name.

nCornerName specifies the formatting width for the corner name.

nResult and nPrec specify the formatting width and the number of significant digits for the performance measure value.
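One plausible layout for such lines is sketched below for scalar values. Only the meaning of the width and precision arguments comes from the description above; the column order, the 'failed' marker for None, the alphabetical default order, and the helper format_results() are assumptions. The %g conversion is used because its precision counts significant digits.

```python
def format_results(results, output_order=None, nMeasureName=10, nCornerName=6,
                   nResult=12, nPrec=3):
    """Hypothetical sketch: one line per (measure, corner) for scalar values."""
    order = output_order if output_order is not None else sorted(results)
    lines = []
    for measure in order:
        for corner, value in results[measure].items():
            if value is None:
                text = '%*s' % (nResult, 'failed')
            else:
                text = '%*.*g' % (nResult, nPrec, value)
            lines.append('%-*s %-*s %s' % (nMeasureName, measure, nCornerName, corner, text))
    return '\n'.join(lines)


print(format_results({'gain': {'nom': 123.456, 'slow': None}}))
```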

getAnnotator()

Returns an object of the PerformanceAnnotator class which can be used as a plugin for iterative algorithms. The plugin takes care of propagating cost function details (the results member) from the machine where the evaluation of the cost function takes place to the machine where the evaluation was requested (usually the master).

getCollector()

Returns an object of the PerformanceCollector class which can be used as a plugin for iterative algorithms. The plugin gathers performance information from the results member of the PerformanceEvaluator object across iterations of the algorithm.

getComputedMeasures()

Returns the names of all measures that are computed by the evaluator.