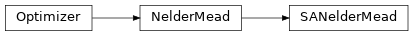

4.8. pyopus.optimizer.sanm — Unconstrained successive approximation Nelder-Mead simplex optimizer¶

Unconstrained successive approximation Nelder-Mead simplex optimizer (PyOPUS subsystem name: SANMOPT)

A provably convergent version of the Nelder-Mead simplex algorithm. The algorithm performs unconstrained optimization. Convergence is achieved by performing optimization on gradually refined approximations of the cost function and keeping the simplex internal angles away from 0. Function approximations are constructed with the help of a regular grid of points.

The algorithm was published in

- sanm

Bűrmen Á., Tuma T.: Unconstrained derivative-free optimization by successive approximation. Journal of computational and applied mathematics, vol. 223, pp. 62-74, 2009.

-

class

pyopus.optimizer.sanm.SANelderMead(function, debug=0, fstop=None, maxiter=None, reflect=1.0, expand=1.2, outerContract=0.5, innerContract=- 0.5, shrink=0.25, reltol=1e-15, ftol=1e-15, xtol=1e-09, simplex=None, lam=2.0, Lam=4503599627370496.0, c=1e-06, tau_r=2.220446049250313e-16, tau_a=1e-100)[source]¶ Unconstrained successive approximation Nelder-Mead optimizer class

Default values of the expansion (1.2) and shrink (0.25) coefficients are different from the original Nelder-Mead values. Different are also the values of relative tolerance (1e-16), and absolute function (1e-16) and side length size (1e-9) tolerance.

lam and Lam are the lower and upper bound on the simplex side length with respect to the grid. The shape (side length determinant) is bounded with respect to the product of simplex side lengths with c.

The grid density has a continuity bound due to the finite precision of floating point numbers. Therefore the grid begins to behave as continuous when its density falls below the relative(tau_r) and absolute (tau_a) bound with respect to the grid origin.

See the

NelderMeadclass for more information.-

buildGrid(density=10.0)[source]¶ Generates the intial grid density for the algorithm. The grid is determined relative to the bounding box of initial simplex sides. density specifies the number of points in every grid direction that covers the corresponding side of the bounding box.

If any side of the bounding box has zero length, the mean of all side lengths divided by density is used as grid density in the corresponding direction.

Returns the 1-dimensional array of length ndim holding the grid densities.

-

check()[source]¶ Checks the optimization algorithm’s settings and raises an exception if something is wrong.

-

continuityBound()[source]¶ Finds the components of the vector for which the corresponding grid density is below the continuity bound.

Returns a tuple (delta_prime, ndx_cont). delta_prime is the vector of grid densities where the components that are below the continuity bound are replaced with the bound. ndx_cont is a vector of grid component indices for which the grid is below the continuity bound.

-

grfun(x, count=True)[source]¶ Returns the value of the cost function approximation at x corresponding to the current grid. If count is

Falsethe cost function evaluation happened for debugging purposes and is not counted or registered in any way.

-

gridRestrain(x)[source]¶ Returns the point on the grid that is closest to x. The componnets of x that correspond to the grid directions for whch the density is below the continuity bound are left unchanged. The remaining components are rounded to the nearest value on the grid.

-

reset(x0)[source]¶ Puts the optimizer in its initial state and sets the initial point to be the 1-dimensional array or list x0. The length of the array becomes the dimension of the optimization problem (

ndimmember).The initial simplex is built around x0 by calling the

buildSimplex()method with default values for the rel and abs arguments.If x0 is a 2-dimensional array or list of size (ndim*+1) times *ndim it specifies the initial simplex.

A corresponding grid is created by calling the

buildGrid()method.The initial value of the natural logarithm of the simplex side vectors determinant is calculated and stored. This value gets updated at every simplex algorithm step. The only time it needs to be reevaluated is at reshape. But that is also quite simple because the reshaped simplex is orthogonal. The only place where a full determinant needs to be calculated is here.

-

reshape(v)[source]¶ Reshapes simpex side vectors given by v into orthogonal sides with their bounding box bounded in length by lam and Lam with respect to the grid density.

Returns a tuple (vnew, logDet, l) where vnew holds the reshaped simplex sides, logDet is the natural logarithm of the reshaped simplex’s determinant, and l is the 1-dimensional array of reshaped side lengths.

logDet is in fact the natural logarithm of the side lengths product, because the reshaped sides are orthogonal.

-

sortedSideVectors()[source]¶ Returns a tuple (vsorted, lsorted) where vsorted is an array holding the simplex side vectors sorted by their length with longest side first. The first index of the 2-dimensional array is the side vector index while the second one is the component index. lsorted is a 1-dimensional array of corresponding simplex side lengths.

-

Example file sanm.py in folder demo/optimizer/

# Optimize MGH suite with successive approximation Nelder-Mead optimizer.

from pyopus.optimizer.sanm import SANelderMead

from pyopus.optimizer.base import Reporter

from pyopus.problems.mgh import *

from numpy import array, sqrt

from platform import system

if system()=='Windows':

# perf_counter() is the most precise timer in Windows

from time import perf_counter as timer

else:

# time() is the most precise timer in Linux

from time import time as timer

from sys import stdout

# Custom reporter that prints dots

class MyReporter(Reporter):

def __init__(self, name, iterSpacing=1, concise=False, printImprovement=True):

Reporter.__init__(self)

self.name=name

self.iterSpacing=iterSpacing

self.concise=concise

self.printImprovement=printImprovement

self.fbest=None

self.lastPrintout=None

def reset(self):

fbest=None

def __call__(self, x, f, opt):

report=False

if self.fbest is None:

self.lastPrintout=opt.niter

self.fbest=f

report=True

if self.fbest>f and self.printImprovement:

self.fbest=f

report=True

if opt.niter-self.lastPrintout>=self.iterSpacing:

report=True

if report:

if self.concise:

stdout.write(".")

stdout.flush()

else:

print("%30s (%2d) iter=%-6d f=%12.4e fbest=%12.4e" % (self.name[:30], x.size, opt.niter, f, self.fbest))

self.lastPrintout=opt.niter

if __name__=='__main__':

suite=[

[ Rosenbrock() ],

[ FreudensteinAndRoth() ],

[ PowellBadlyScaled() ],

[ BrownBadlyScaled() ],

[ Beale() ],

[ JennrichAndSampson() ],

[ McKinnon() ],

[ McKinnon(), array([[0.0, 0.0], [1.0, 1.0], [(1.0+sqrt(33.0))/8.0, (1.0-sqrt(33.0))/8.0]]) ],

[ HelicalValley() ],

[ Bard() ],

[ Gaussian() ],

[ Meyer() ],

[ GulfResearchAndDevelopement() ],

[ Box3D() ],

[ PowellSingular() ],

[ Wood() ],

[ KowalikAndOsborne() ],

[ BrownAndDennis() ],

[ Quadratic(4) ],

[ PenaltyI(4) ],

[ PenaltyII(4) ],

[ Osborne1() ],

[ BrownAlmostLinear(5) ],

[ BiggsEXP6() ],

[ ExtendedRosenbrock(6) ],

[ BrownAlmostLinear(7) ],

[ Quadratic(8) ],

[ ExtendedRosenbrock(8) ],

[ VariablyDimensioned(8) ],

[ ExtendedPowellSingular(8) ],

[ Watson() ],

[ ExtendedRosenbrock(10) ],

[ PenaltyI(10) ],

[ PenaltyII(10) ],

[ Trigonometric() ],

[ Osborne2() ],

[ ExtendedPowellSingular(12) ],

[ Quadratic(16) ],

[ Quadratic(24) ],

]

results=[]

# Sub-suites of problems

# mysuite=suite[7:8] # McKinnon (alt)

# mysuite=suite[0:8] # First 8 functions

# mysuite=suite[1:2] # Freudenstein and Roth

# mysuite=[suite[3], suite[6], suite[8], suite[10], suite[16], suite[34]]

# brown, mckinnon, helical, gaussian, kowalik, trig

# mysuite=[suite[8]] # helical

mysuite=suite

for probdef in mysuite:

# Take problem function.

prob=probdef[0]

# Write a message.

print("\nProcessing: "+prob.name+" ("+str(prob.n)+") ")

# Create optimizer object.

opt=SANelderMead(prob.f, debug=0, maxiter=100000)

# Install custom reporter plugin. Print dot for 500 evaluations.

opt.installPlugin(MyReporter(prob.name, 1000, concise=True, printImprovement=False))

# If problem has a custom initial simplex, set it.

if len(probdef)==1:

opt.reset(prob.initial)

else:

# Custom simplex (for McKinnon)

opt.reset(probdef[1])

# Start timing, run, measure time.

dt=timer()

opt.run()

dt=timer()-dt

# Write number of function evaluations.

print(" %d evaluations" % opt.niter)

# Calculate initial and final gradient

gini=prob.g(prob.initial)

gend=prob.g(opt.x)

# Store results for this problem.

result={

'i': opt.niter,

'x': opt.x,

'f': opt.f,

'gi': sqrt((gini**2).sum()),

'ge': sqrt((gend**2).sum()),

't': dt

}

results.append(result)

# Print summary. Last column is initial/final gradient.

print("\n")

for i in range(0, len(mysuite)):

prob=mysuite[i][0]

print(

"%2d: %30s (%2d): ni=%6d f=%16.8e gradient: %9.1e -> %9.1e : r=%9.1e" % (

i,

prob.name[:30],

prob.initial.size,

results[i]['i'],

results[i]['f'],

results[i]['gi'],

results[i]['ge'],

results[i]['gi']/results[i]['ge'],

)

)